Basics: Reverse Proxy & Container Setup for an eCommerce Business

Introduction

Embarking on a technical journey can be intimidating, especially with the diverse skill levels and backgrounds we each bring to the table. As someone who has been in the thick of eCommerce for over a decade, I understand the need to lay a solid foundation so that everyone can follow along as we dive deeper into future content. So, this blog post is for all of us: whether you’re just starting out or you’ve been tinkering with servers for years. Let’s get our hands dirty together, setting up a VPS server with all the trimmings that will take our eCommerce businesses to the next level of technical autonomy.

Let’s get started

DNS and Subdomains: Our Starting Point

Our digital journey starts with the Domain Name System (DNS), the internet service that translates domain names into IP addresses. We’re going to set up a subdomain. In my own use case this is ca.m3tam3re.com, which will act as a dedicated portal for managing containers and services.

The setup process is fairly simple:

- Log in to your domain registrar and navigate to the DNS settings of your domain.

- Add a new ‘A’ record, input the desired subdomain (e.g., ‘ca’), and assign your server’s public IP address to it.

- Set a reasonable TTL (time to live).

While every domain registrar has its own nooks and crannies, the overall process is the same: log in, find your DNS settings, and add an A record for your desired subdomain that points to your server’s IP. Pro tip: a TTL of 600 seconds tells DNS resolvers to cache the record for at most 10 minutes. Later changes therefore spread across the internet quickly, and visitors are not forced into a fresh lookup on every request.
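
Before moving on, you can check that the new record resolves. This is a minimal sketch that assumes the `dig` tool is installed. It ships in the `dnsutils` package on Ubuntu, and on Windows `nslookup ca.m3tam3re.com` does the same job. Replace the subdomain with your own:

```shell
# Ask DNS for the A record of the subdomain; it should print your server's public IP
dig +short A ca.m3tam3re.com
```

If nothing is printed yet, give the record a few minutes to propagate.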

Ubuntu and SSH Keys: Locking Down Our Fort

When it comes to choosing an operating system for our server, Ubuntu’s a solid pick. Installing it is typically a matter of a few clicks on most cloud platforms. But what’s even more important is keeping our server secure, and that means using SSH keys instead of passwords. They’re like the VIP pass to our server – no key, no entry.

Here’s the lowdown on setting things up securely:

1. Create SSH key-pair
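
Here is a minimal sketch of the key generation. It assumes the Ed25519 key type, which matches the default `id_ed25519` file name, and the file name `cloud-demo` used throughout this post. It is written for a Unix-like shell. Passing `-f` skips the file-name prompt, but ssh-keygen still asks for an optional passphrase. In Windows PowerShell, running plain `ssh-keygen -t ed25519` and answering the prompts works just as well:

```shell
# Make sure the ~/.ssh directory exists and only you can access it
mkdir -p ~/.ssh
chmod 700 ~/.ssh
# -t picks the key type, -f the file name, -C adds a label to the public key
ssh-keygen -t ed25519 -f ~/.ssh/cloud-demo -C "cloud-demo"
```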

Note: During key generation, you’ll be asked for a file in which to save the key (`Enter file in which to save the key (your_home_path/.ssh/id_ed25519):`). You can enter a custom path here, or simply press Enter to accept the default location and file name.

Your keys are typically stored in your user directory, specifically in `~/.ssh/`. To name the key `cloud-demo`, enter the full path to that directory at the prompt. A bare `cloud-demo` would be saved in whatever directory you are currently in. Entering the full path creates the private key as `~/.ssh/cloud-demo` and the public key as `~/.ssh/cloud-demo.pub`.

After creating your SSH key pair it’s worth noting that most cloud providers offer a nifty feature. They allow you to add your public SSH key right at the moment of setting up your new server. This is incredibly convenient, as it saves you the additional steps of manually transferring and configuring the key after the server is already up and running.

If your cloud provider supports this and you have provided your public key (not the private key!), you can create a new entry in your `~/.ssh/config` file:
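
Here is a sketch of such an entry. It assumes the server IP `65.21.180.91`, which is the one used in the `ssh-copy-id` example later in this post, and the `cloud-demo` key file. Replace both with your own values:

```
# ~/.ssh/config
Host cloud-demo
    HostName 65.21.180.91
    User root
    IdentityFile ~/.ssh/cloud-demo
```

`Host` is the short name you type, `HostName` is the real address, and `IdentityFile` is the private key that ssh offers to the server.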

This lets you log into your VPS with SSH by typing only `ssh cloud-demo`.

Without the `cloud-demo` entry in the config file, your login command would look like this, with your own server’s IP: `ssh -i ~/.ssh/cloud-demo root@65.21.180.91`.

If you did not provide your public key to your cloud provider, or your provider does not support it, you log in with `ssh root@65.21.180.91` instead and will be prompted for the root password.

2. User setup on the remote server

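The first thing I do is create a new user with my own username, m3tam3re, using `adduser m3tam3re`. The command asks for a password for the new user, so choose a strong one. It also asks for a few optional details.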

Next, I add the new user to the sudo group, which allows it to run commands as superuser via `sudo`. After the initial setup of the server I will probably never use the root user again. The command is `usermod -aG sudo m3tam3re`. The `-aG` flags append the group without removing any existing ones.

Then I switch to my new user with `su - m3tam3re`.

Now I will create a .ssh folder and set secure permissions:
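
Here is a minimal sketch, run as the new user on the server. The mode `700` means only the owner can read, write, or enter the directory:

```shell
# Create the .ssh directory in the new user's home (no error if it already exists)
mkdir -p ~/.ssh
# Owner-only access; sshd checks the permissions of this directory
chmod 700 ~/.ssh
```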

Once this is done, I type `exit` to switch from my new user back to root, and then `exit` again to close the SSH connection.

Back on my local machine I will copy my public key to my remote machine:

Now I log into my remote server with SSH again. This time I log in as the new user with the password I set earlier: `ssh m3tam3re@65.21.180.91`.

I want to be able to log in with my private key later on. The SSH server looks for a file named `authorized_keys` in the user’s `.ssh` folder. With the following command I append the contents of my public key file to `~/.ssh/authorized_keys`:

Now I can delete the keyfile:
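
This one-line sketch assumes the key was copied to `~/cloud-demo.pub` on the server:

```shell
# The key now lives in authorized_keys, so the copy in the home directory is no longer needed
rm ~/cloud-demo.pub
```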

🚀 On Linux you can do the whole process with a single command. It copies the public key to the remote server and adds it to `authorized_keys`:

`ssh-copy-id -i ~/.ssh/cloud-demo.pub m3tam3re@65.21.180.91`

The same applies to macOS. However, many readers use Windows, where `ssh-copy-id` is not included, and I wanted to make sure the steps can be reproduced there too.

3. Make SSH more secure

I want to make sure my shiny new server is secure. So I make some small changes to the SSH server configuration. I want to achieve 3 things:

- Login to the server should only be possible with a key-pair

- Login with passwords should not be possible

- The root user should not be allowed to login over SSH

I open the SSH server configuration with `sudo nano /etc/ssh/sshd_config`. There are 3 lines in this file that have to look like this in the end:
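
The three settings below match the goals listed above. In the stock Ubuntu file some of them are commented out with `#` or set to other values. Edit the existing lines rather than adding duplicates, because sshd uses the first value it finds for each keyword:

```
# /etc/ssh/sshd_config
PermitRootLogin no
PasswordAuthentication no
PubkeyAuthentication yes
```

There is one caveat. Ubuntu’s `sshd_config` includes the files in `/etc/ssh/sshd_config.d/` near the top, so any values set there are read first. Some cloud images ship a file in that directory that sets `PasswordAuthentication yes`, and that value would win over your edit. Check that directory as well. Testing the login from a second terminal also catches this kind of problem.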

Now I need to restart SSH to apply the changes:

Because mistakes happen, I verify my settings from a second terminal first. So do not log out yet!

In a new terminal window on my local machine, I run some tests to verify the new settings:

Since all of this gives the expected results I can go back to my still open remote SSH session and log out with exit.

Remember the SSH config file from the beginning? Now I can simply change the user from root to my username:
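
Here is a sketch of the updated entry. It assumes the server IP `65.21.180.91` and the key `~/.ssh/cloud-demo`. Compared with the earlier root entry, only the `User` line changes:

```
# ~/.ssh/config
Host cloud-demo
    HostName 65.21.180.91
    User m3tam3re
    IdentityFile ~/.ssh/cloud-demo
```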

And now I can log in with just `ssh cloud-demo`.

4. Enable the Firewall

Understanding the Importance of a Firewall

A server firewall is your digital security guard; it decides which traffic is allowed in and out of your system. Here’s why it’s vital:

- Controls Access: It keeps unauthorized users and harmful data from accessing your server.

- Thwarts Attacks: It helps prevent cyber threats like intrusions and denial-of-service attacks.

- Maintains Privacy: A firewall limits exposure of your data to potential attackers.

- Custom Rules: You can define what’s permissible, tailoring security to your server’s profile.

- Monitors Traffic: It enables you to keep logs of incoming and outgoing traffic for security reviews.

Simply put, a firewall is an essential tool for safeguarding your server against a multitude of online security risks.

Now, let’s proceed to setting up the firewall, empowering your server with this crucial layer of protection.

First, I allow incoming connections for the three services this server needs: SSH (port 22), HTTP (port 80), and HTTPS (port 443).

Now I can enable the firewall and check the status.

Well done: the basic security setup of the server is complete 🚀

Install Caddy

Before we dive into the installation, let’s touch on what Caddy is. Caddy is an open-source web server that automates secure web traffic with built-in HTTPS. It’s user-friendly, automatically handles SSL certificates, and serves as a robust platform for web hosting and reverse proxy configurations. With its simplicity and security features, Caddy stands out as a preferred choice for developers and sysadmins looking for a reliable, low-maintenance web server.

Installing Caddy is straightforward. The steps are copied from the official Caddy docs, and I include them here to spare you a trip to the Caddy website:

These commands add Caddy’s package repository to the Ubuntu installation, verify it with a GPG key, and install the Caddy package.

Now we need to edit Caddy’s configuration file, the Caddyfile. It is located at `/etc/caddy/Caddyfile` and requires root access to edit, so we open it with `sudo nano /etc/caddy/Caddyfile`. We use nano because it is easier for most people (sorry vim, I still love you).

Now we enter the following into the file. It replaces the example content and uses our own subdomain:
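
Here is a minimal sketch of the site block. It assumes Portainer will listen on `localhost:9000` on the host, which is Portainer’s plain-HTTP port. Swap in your own subdomain:

```
# /etc/caddy/Caddyfile
ca.m3tam3re.com {
	# Caddy obtains and renews the HTTPS certificate for this name automatically
	# and forwards every request to Portainer running on the same machine
	reverse_proxy localhost:9000
}
```

Save the file and apply it with `sudo systemctl reload caddy`. Until Portainer is running, Caddy answers requests for this address with a 502 error, which is expected.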

Docker: Our Software Container Maestro

Docker lets us wrap our web services in neat little packages that we can easily move around and duplicate. It makes scaling and deployment nearly effortless, which is pretty much gold in our line of work.

Before we move forward with setting up Portainer, let’s take a moment to understand the pieces of technology we’re working with. Docker and Docker Compose are tools that greatly simplify the process of deploying and managing applications.

Docker is a containerization platform that packages an application and its dependencies into a container, ensuring consistency across various computing environments. Think of it as a way to “containerize” your application, isolating it from the system it runs on.

Docker Compose is a tool for defining and running multi-container Docker applications. With a simple YAML file, you outline the components necessary for your application to run, and with a few commands, bring your entire environment to life.

Together, Docker and Docker Compose streamline the process of deploying and managing applications, allowing developers to focus more on building their software rather than worrying about the underlying infrastructure.

Portainer: Keeping an Eye on Our Containers

Portainer’s the wingman to Docker, giving us a sleek interface to manage all those containers. It’s like having a central dashboard where we can click our way through tasks that would normally be a mouthful of commands.

First we create a volume for Portainer. It keeps Portainer’s data, such as users and settings, when the container is removed and recreated, for example during an upgrade.

Now I can start the portainer container with the following command:

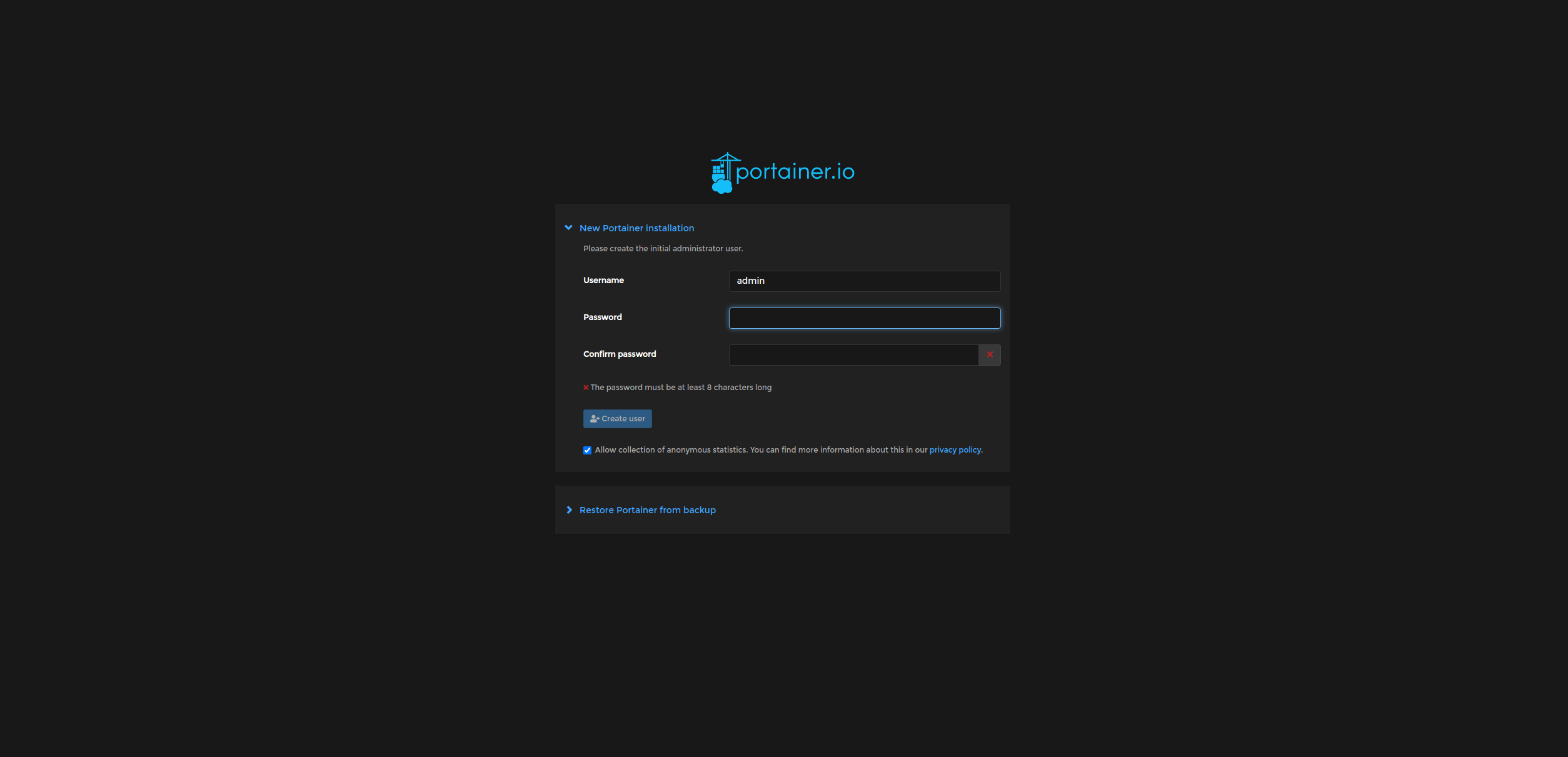

Now, hitting up ca.m3tam3re.com should bring us face to face with the Portainer login screen where we can create a login with a secure password.

Warnings and Cautions: Navigating Potential Pitfalls

While setting up your VPS and configuring services can be a rewarding process, there are a few common pitfalls that you should be aware of to ensure a smooth setup experience. Here are some potential issues and tips on how to avoid them:

Security Comes First

- Never use default passwords: Always change default passwords immediately after installing new software or services on your server. Default passwords are a major security risk.

- Be careful with root access: Mistakes made with root privileges can be catastrophic, so always double-check commands before executing them as root.

SSH Key Configuration

- Don’t mistake private for public keys: When you’re adding your SSH key to a server or a cloud provider panel, ensure you provide your public key (typically `~/.ssh/your_key_name.pub`) and not your private key. Exposing your private key is like giving away the keys to your kingdom.

- File permissions are crucial: The SSH key files should have strict permissions set, and your `.ssh` directory should be set to `700`. Your private key should be `600` and the public key `644`.

DNS Settings

- Propagation time: After adjusting your DNS settings, keep in mind that changes may not be immediate. DNS propagation can take anywhere from a few minutes to 48 hours. Avoid making further changes during this period, as it can cause confusion and extended delays.

Firewall Configuration

- Verify before enabling the firewall: Always ensure that the SSH port (`22` by default) is allowed through the firewall before enabling it. Forgetting to do this could lock you out of your server.

- Minimal ports open: Only open the ports necessary for your operation. Every open port is a potential entry point for attackers.

Docker and Containers

- Keep data persistent: When using Docker containers, remember that the default container filesystem is ephemeral. For any data you wish to persist, make sure to use volumes.

Updates and Maintenance

- Stay updated: Regularly update your server’s software. Outdated applications can have security vulnerabilities that are exploitable.

- Backups: Before making significant changes to your server, back up your data. If something goes wrong, you’ll be relieved to have a recovery point.

Make sure to move slowly through each configuration step, understand the commands you are executing, and double-check configurations before restarting services or the server itself. One small typo could cause a service to fail to start or, worse, lead to server compromise. If ever in doubt, consult the documentation or seek advice from a knowledgeable colleague or the tech community.

Conclusion

To be honest, I am still figuring out how I would like to handle things. On the one hand, it is good to show how I actually do things in my own eCommerce business. On the other hand, I want to help as many people as possible learn about technologies that help automate an eCommerce business. For now I have decided to keep things simple and make my content easy to follow, hopefully. Things will get more complicated down the road anyway.

Recommending Ubuntu, Docker, and Portainer is a reasonable choice. These tools are widely adopted, so it is easy to find additional help and tutorials on the internet if somebody gets stuck or has a problem.